AWS SAM with built-in CodeDeploy: Deploying serverless applications gradually

- Deploys new versions of your Lambda function, and automatically creates aliases that point to the new version.

- Gradually shifts customer traffic to the new version until you’re satisfied that it’s working as expected, or you roll back the update.

- Defines pre-traffic and post-traffic test functions to verify that the newly deployed code is configured correctly and your application operates as expected.

- Rolls back the deployment if CloudWatch alarms are triggered.
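SAM's `DeploymentPreference` `Type` values, such as `Canary10Percent5Minutes` and `Linear10PercentEvery1Minute`, describe how the alias weight moves from the old version to the new one. The following is a minimal Python model of those two shapes plus alarm-driven rollback. It models the schedule only; it is not CodeDeploy's implementation, and the function names and the single `alarm_minute` parameter are hypothetical simplifications.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficStep:
    minute: int           # minutes after the deployment starts
    new_version_pct: int  # share of traffic the alias sends to the new version


def linear_schedule(step_pct: int, interval_min: int) -> list[TrafficStep]:
    """Model of LinearXPercentEveryYMinutes: add step_pct every interval_min."""
    steps: list[TrafficStep] = []
    pct, minute = 0, 0
    while pct < 100:
        pct = min(100, pct + step_pct)
        steps.append(TrafficStep(minute, pct))
        minute += interval_min
    return steps


def canary_schedule(canary_pct: int, wait_min: int) -> list[TrafficStep]:
    """Model of CanaryXPercentYMinutes: canary_pct first, the rest after wait_min."""
    return [TrafficStep(0, canary_pct), TrafficStep(wait_min, 100)]


def run_deployment(steps: list[TrafficStep], alarm_minute: int | None = None) -> int:
    """Walk the schedule; an alarm before a step rolls traffic back to the old version."""
    current = 0
    for step in steps:
        if alarm_minute is not None and alarm_minute <= step.minute:
            return 0  # rollback: all traffic returns to the previous version
        current = step.new_version_pct
    return current
```

For example, `run_deployment(canary_schedule(10, 5), alarm_minute=3)` returns 0 because the alarm fires while only the 10% canary is live.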

Use AWS CloudFormation StackSets to deploy to multiple accounts/regions

Concepts

StackSets

A stack set lets you create stacks in AWS accounts across regions by using a single AWS CloudFormation template.

Stack Instance

A stack instance is a reference to a stack in a target account within a Region.
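A stack set manages one stack instance for each combination of target account and Region. The following small Python sketch makes that fan-out explicit. The account IDs used with it are placeholders, and the helper name is hypothetical.

```python
from __future__ import annotations

from itertools import product
from typing import NamedTuple


class StackInstance(NamedTuple):
    account_id: str
    region: str


def plan_stack_instances(account_ids: list[str], regions: list[str]) -> list[StackInstance]:
    """Return the stack instances a stack set would manage: one per (account, Region) pair."""
    return [StackInstance(acct, region) for acct, region in product(account_ids, regions)]


plan = plan_stack_instances(["111111111111", "222222222222"],
                            ["us-east-1", "eu-west-1", "ap-southeast-2"])
print(len(plan))  # 2 accounts x 3 Regions
```

With boto3, the same accounts and Regions would be passed to `create_stack_instances(StackSetName=..., Accounts=[...], Regions=[...])` for self-managed permissions. Service-managed stack sets target organizational units through `DeploymentTargets` instead.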

Permissions models for stack sets

self-managed permissions

- You create the IAM roles required by StackSets to deploy across accounts and Regions

- Requires a trust relationship between the account you’re administering the stack set from and the accounts you’re deploying stack instances to.

service-managed permissions

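- Deploys stacks into accounts in your AWS Organizations organization.

- Supports automatic deployment to accounts that are later added to the target organization or organizational unit (OU).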

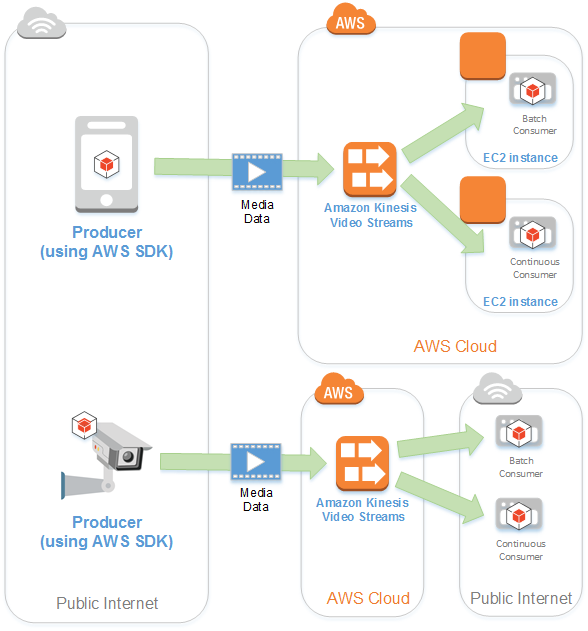

Amazon Kinesis Video Streams

Kinesis Video Streams Producer libraries

A set of easy-to-use software and libraries that you can install and configure on your devices. These libraries make it easy to securely connect and reliably stream video in different ways, including in real time, after buffering it for a few seconds, or as after-the-fact media uploads.

Consumer

- Gets data, such as fragments and frames, from a Kinesis video stream to view, process, or analyze it. Generally these consumers are called Kinesis Video Streams applications.

- You can write applications that consume and process data in Kinesis video streams in real time, or after the data is durably stored and time-indexed when low latency processing is not required.

- Kinesis Video Streams does not support putting data into S3 “out-of-the-box”.

Associating an Amazon VPC and a private hosted zone from different AWS accounts

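- You must use the AWS CLI, an AWS SDK, or the Route 53 API; the Route 53 console can't associate a VPC with a private hosted zone created by a different account.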

- To associate a Route 53 private hosted zone in one AWS account (Account A) with a virtual private cloud that belongs to another AWS account (Account B):

1. Connect to an EC2 instance in Account A.

2. Update the AWS CLI to the latest version.

3. Run this command to list the available hosted zones in Account A. Note the ID of the hosted zone in Account A that you will associate with the VPC in Account B.

   aws route53 list-hosted-zones

4. Run the following command to authorize the association between the private hosted zone in Account A and the virtual private cloud in Account B. Use the hosted zone ID from the previous step, as well as the Region and ID of the virtual private cloud in Account B.

   aws route53 create-vpc-association-authorization --hosted-zone-id <hosted-zone-id> --vpc VPCRegion=<region>,VPCId=<vpc-id>

5. Connect to an EC2 instance in Account B.

6. Run the following command to create the association between the private hosted zone in Account A and the virtual private cloud in Account B. Use the hosted zone ID from step 3, as well as the Region and ID of the virtual private cloud in Account B.

   aws route53 associate-vpc-with-hosted-zone --hosted-zone-id <hosted-zone-id> --vpc VPCRegion=<region>,VPCId=<vpc-id>

7. It’s a best practice to delete the association authorization after the association is created. Doing this prevents you from recreating the same association later. To delete the authorization, reconnect to an EC2 instance in Account A. Then, run this command:

   aws route53 delete-vpc-association-authorization --hosted-zone-id <hosted-zone-id> --vpc VPCRegion=<region>,VPCId=<vpc-id>
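The same authorize, associate, and clean-up sequence can be sketched with boto3's Route 53 client. The methods `create_vpc_association_authorization`, `associate_vpc_with_hosted_zone`, and `delete_vpc_association_authorization` mirror the CLI commands. The two clients are passed in so that the order of calls is visible, and the function can be exercised without credentials. How you obtain a client per account is up to you, for example `boto3.Session(profile_name=...)` with hypothetical profile names.

```python
def associate_cross_account(client_a, client_b, hosted_zone_id: str,
                            vpc_region: str, vpc_id: str) -> None:
    """Associate Account B's VPC with Account A's private hosted zone.

    client_a: Route 53 client using Account A credentials (owns the hosted zone).
    client_b: Route 53 client using Account B credentials (owns the VPC).
    """
    vpc = {"VPCRegion": vpc_region, "VPCId": vpc_id}
    # Account A authorizes the association.
    client_a.create_vpc_association_authorization(HostedZoneId=hosted_zone_id, VPC=vpc)
    # Account B creates the association.
    client_b.associate_vpc_with_hosted_zone(HostedZoneId=hosted_zone_id, VPC=vpc)
    # Account A deletes the authorization; the association itself stays in place.
    client_a.delete_vpc_association_authorization(HostedZoneId=hosted_zone_id, VPC=vpc)
```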

Use Lambda@Edge to authorize request before it reaches origin infrastructure

You cannot cache authorization.

5. Lambda@Edge decodes the JWT and checks if the user belongs to the correct Cognito User Pool. It also verifies the cryptographic signature using the public RSA key for Cognito User Pool. Crypto verification ensures that JWT was created by the trusted party.


For more details, see Authorization@Edge – How to Use Lambda@Edge and JSON Web Tokens to Enhance Web Application Security.
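The claim checks in step 5 can be sketched in Python with only the standard library. The following partial sketch decodes a JWT payload and checks the `iss` and `exp` claims against a Cognito user pool. Cognito's issuer format is `https://cognito-idp.<region>.amazonaws.com/<user-pool-id>`, while the function name and parameters are hypothetical. The sketch deliberately does not verify the RS256 signature. That check is what proves the token came from the trusted party, and it needs a JWT/crypto library plus the pool's public keys, published at `<issuer>/.well-known/jwks.json`.

```python
from __future__ import annotations

import base64
import json
import time


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore the padding first.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def check_cognito_claims(token: str, region: str, user_pool_id: str,
                         now: float | None = None) -> dict:
    """Decode the payload and check issuer and expiry.

    WARNING: this does NOT verify the signature; do that separately with the pool's JWKS.
    """
    try:
        _header_b64, payload_b64, _signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed JWT") from None
    claims = json.loads(_b64url_decode(payload_b64))
    expected_iss = f"https://cognito-idp.{region}.amazonaws.com/{user_pool_id}"
    if claims.get("iss") != expected_iss:
        raise PermissionError("token was not issued by the expected user pool")
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise PermissionError("token has expired")
    return claims
```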

Sources and Targets of DMS

Sources

Cross-Region Snapshot Copy for RDS and EBS

There is no charge for the copy operation itself; you pay only for the data transfer out of the source region and for the data storage in the destination region.

Stop and Re-start Elastic Beanstalk on a schedule

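- A Lambda function stops and restarts the environment

- The function needs the Elastic Beanstalk environment ID

- The function needs to assume a role with the AWSElasticBeanstalkFullAccess policy

- A CloudWatch Events rule on a cron schedule invokes the function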
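The following is a minimal sketch of such a function in Python, under stated assumptions. One approach, assumed here and not confirmed by these notes, is to "stop" by calling `terminate_environment` and to "start" by calling `rebuild_environment` with the same environment ID, which explains why the ID is needed. The event shape `{"action": "stop" | "start"}` and the `EB_ENV_ID` environment variable are hypothetical. Two rules, such as `cron(0 19 ? * MON-FRI *)` and `cron(0 7 ? * MON-FRI *)` (hypothetical times, in UTC), would pass the matching action as constant input. Terminating removes the environment's resources, so check what it deletes, and AWS's time limit on rebuilding a terminated environment, before relying on this.

```python
import os


def eb_schedule_handler(event, context, eb_client=None):
    """Stop or start an Elastic Beanstalk environment; eb_client is injectable for testing."""
    if eb_client is None:
        import boto3  # included in the Lambda Python runtime
        eb_client = boto3.client("elasticbeanstalk")
    env_id = os.environ["EB_ENV_ID"]  # hypothetical variable holding e.g. "e-abc123"
    action = event.get("action")
    if action == "stop":
        # Terminate the environment; its ID is what allows a later rebuild.
        eb_client.terminate_environment(EnvironmentId=env_id)
    elif action == "start":
        # Recreate the terminated environment from the same ID.
        eb_client.rebuild_environment(EnvironmentId=env_id)
    else:
        raise ValueError(f"unknown action: {action!r}")
    return {"action": action, "environment_id": env_id}
```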

S3 object-level logging with CloudTrail

CloudTrail supports logging Amazon S3 object-level API operations such as GetObject, DeleteObject, and PutObject.

S3 event notifications

Targets

- SNS

- SQS

- Lambda

CloudWatch Events is not supported.

Limitation

Does not act on failed operations.

Filtering

You can filter notifications by object key prefix and/or suffix.
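The following Python sketch builds a prefix/suffix filter in the shape S3 expects, and models how a key is matched against it. Both rules must match, and the values are literal strings with no wildcards. The helper names are hypothetical, and the matcher is a model, not S3's code.

```python
def s3_filter_config(prefix: str = "", suffix: str = "") -> dict:
    """Build the Filter element of a bucket notification configuration."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    return {"Key": {"FilterRules": rules}}


def matches_filter(key: str, filter_config: dict) -> bool:
    """Model of key matching: every rule must match; an empty rule list matches all keys."""
    for rule in filter_config["Key"]["FilterRules"]:
        name = rule["Name"].lower()
        if name == "prefix" and not key.startswith(rule["Value"]):
            return False
        if name == "suffix" and not key.endswith(rule["Value"]):
            return False
    return True
```

The output of `s3_filter_config()` goes in the `Filter` field of each entry in `LambdaFunctionConfigurations`, `QueueConfigurations`, or `TopicConfigurations` when calling `put_bucket_notification_configuration`.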

Real-time Processing of Log Data with Subscriptions

- Subscriptions

- Real-time feed of log events from CloudWatch Logs

- Delivered to:

- Amazon Kinesis stream,

- Amazon Kinesis Data Firehose stream,

- AWS Lambda

- Steps:

- Create the receiving resource

- Create a subscription filter: what log events get delivered and where to send them

- Each log group can have up to two subscription filters associated with it.
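When the receiving resource is a Lambda function, CloudWatch Logs delivers the subscription data as a base64-encoded, gzip-compressed JSON document under `event["awslogs"]["data"]`. The following is a minimal Python sketch of a receiving function that decodes it. The function names are hypothetical, and the handler simply returns the log messages instead of processing them.

```python
import base64
import gzip
import json


def decode_subscription_event(event: dict) -> dict:
    """Decode the payload CloudWatch Logs sends to a Lambda subscription."""
    raw = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(raw))


def log_subscription_handler(event, context):
    payload = decode_subscription_event(event)
    if payload.get("messageType") == "CONTROL_MESSAGE":
        # CloudWatch Logs sends these mainly to check that the destination is reachable.
        return []
    return [log_event["message"] for log_event in payload["logEvents"]]
```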

AWS Batch use case: Digital Media

- AWS Batch provides content producers and post-production houses with tools to automate content rendering workloads and reduce the need for human intervention caused by execution dependencies or resource scheduling.

- This includes the scaling of compute cores in a render farm, utilizing Spot Instances, and coordinating the execution of disparate steps in the process.

AWS Single Sign-On

- Simplifies managing SSO access to AWS accounts and business applications.

- Across AWS accounts in AWS Organizations.

AWS Directory Service for AD - Trust Relationship

- One-way or two-way

- External or forest trust

- Between your AWS Directory Service for Microsoft Active Directory and on-premises directories

- Between multiple AWS Managed Microsoft AD directories in the AWS cloud

Use self-created authentication and IAM for authorization

- Create SAML 2.0-based authentication and use AWS SSO (with AWS Organizations) for authorization

- Add OpenID Connect (OIDC) identity providers to a Cognito user pool

Moving an EC2 instance into a placement group

- Stop the instance using the stop-instances command.

- Use the modify-instance-placement command and specify the name of the placement group to which to move the instance:

  aws ec2 modify-instance-placement --instance-id i-0123a456700123456 --group-name MySpreadGroup

- Start the instance using the start-instances command.
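The following Python sketch shows the same three steps with boto3's EC2 client (`stop_instances`, the `instance_stopped` waiter, `modify_instance_placement`, `start_instances`). The client is passed in, and the function name is hypothetical. The waiter matters because the placement change is only accepted once the instance is fully stopped.

```python
def move_to_placement_group(ec2, instance_id: str, group_name: str) -> None:
    """Stop an instance, move it into a placement group, and start it again."""
    ec2.stop_instances(InstanceIds=[instance_id])
    # Wait until the instance is in the stopped state before changing placement.
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])
    ec2.modify_instance_placement(InstanceId=instance_id, GroupName=group_name)
    ec2.start_instances(InstanceIds=[instance_id])
```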

CloudFormation Change Set

- Preview how proposed changes to a stack might impact your running resources.

- AWS CloudFormation makes the changes to your stack only when you decide to execute the change set.
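The following Python sketch shows the preview-then-execute flow with boto3-style calls (`create_change_set`, the `change_set_create_complete` waiter, `describe_change_set`, `execute_change_set`). The client is passed in. `approve` is a hypothetical callback standing in for the human review, and the sketch reads only the first page of `describe_change_set` results. The waiter raises an error if the change set fails, for example when the template contains no changes.

```python
def review_change_set(cfn, stack_name: str, change_set_name: str,
                      template_body: str, approve) -> list:
    """Create a change set, list its resource changes, and execute only if approved."""
    cfn.create_change_set(StackName=stack_name, ChangeSetName=change_set_name,
                          TemplateBody=template_body)
    cfn.get_waiter("change_set_create_complete").wait(
        StackName=stack_name, ChangeSetName=change_set_name)
    desc = cfn.describe_change_set(StackName=stack_name, ChangeSetName=change_set_name)
    # (Action, LogicalResourceId, Replacement); Replacement is only set for Modify.
    changes = [(c["ResourceChange"]["Action"],
                c["ResourceChange"]["LogicalResourceId"],
                c["ResourceChange"].get("Replacement"))
               for c in desc["Changes"]]
    if approve(changes):
        cfn.execute_change_set(StackName=stack_name, ChangeSetName=change_set_name)
    return changes
```

A reviewer callback such as `lambda changes: all(r != "True" for _, _, r in changes)` would, for example, refuse any change set that replaces a resource.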

CloudFormation DeletePolicy attribute

Allowed values:

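- Retain: the resource is kept when the stack is deleted, but it is no longer managed by CloudFormation.

- Snapshot: CloudFormation creates a snapshot before deleting the resource; supported for EC2 volumes, ElastiCache, Neptune, RDS, and Redshift.

- Delete (the default, except for AWS::RDS::DBCluster and AWS::RDS::DBInstance)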

Deploy new AMI to resources defined with CloudFormation

Stand-alone EC2 instance

- Create your new AMIs containing your application or operating system changes.

- Update your template to incorporate the new AMI IDs.

- Update the stack, either from the AWS Management Console or by using the AWS CLI command aws cloudformation update-stack.

- When you update the stack, AWS CloudFormation detects that the AMI ID has changed. It launches a new instance with the new AMI, points the other resources to the new instance, and removes the old instance.

Let Auto-Scaling Group use a new AMI

- Instance type and AMI info are encapsulated in the Auto Scaling launch configuration.

- Edit the AWS::AutoScaling::LaunchConfiguration resource in the template.

- Changing the launch configuration does not impact any of the running Amazon EC2 instances in the Auto Scaling group. An updated launch configuration applies only to new instances that are created after the update.

- If you want to propagate the change to your launch configuration across all the instances in your Auto Scaling group, you can use an UpdatePolicy attribute.
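To keep all new code in one language, the following template fragment is built as a Python dict and printed as JSON. It attaches an `AutoScalingRollingUpdate` update policy to the group, so a new AMI in the launch configuration is rolled out in batches. The logical IDs, `ami-NEW-ID-HERE`, the instance type, sizes, and `PauseTime` are placeholders, not recommendations.

```python
from __future__ import annotations

import json


def asg_with_rolling_update(launch_config_logical_id: str,
                            min_in_service: int = 1,
                            max_batch_size: int = 1) -> dict:
    """AWS::AutoScaling::AutoScalingGroup resource with a rolling UpdatePolicy."""
    return {
        "Type": "AWS::AutoScaling::AutoScalingGroup",
        "Properties": {
            "LaunchConfigurationName": {"Ref": launch_config_logical_id},
            "AvailabilityZones": {"Fn::GetAZs": ""},
            "MinSize": "2",  # placeholder
            "MaxSize": "4",  # placeholder
        },
        # When the launch configuration changes, replace instances in batches
        # instead of leaving the running instances on the old AMI.
        "UpdatePolicy": {
            "AutoScalingRollingUpdate": {
                "MinInstancesInService": str(min_in_service),
                "MaxBatchSize": str(max_batch_size),
                "PauseTime": "PT5M",  # placeholder
            }
        },
    }


template = {
    "Resources": {
        "WebLaunchConfig": {
            "Type": "AWS::AutoScaling::LaunchConfiguration",
            "Properties": {"ImageId": "ami-NEW-ID-HERE", "InstanceType": "t3.micro"},
        },
        "WebGroup": asg_with_rolling_update("WebLaunchConfig"),
    }
}
print(json.dumps(template, indent=2))
```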

RDS Event Notification

Amazon RDS uses the Amazon Simple Notification Service (Amazon SNS) to provide notification when an Amazon RDS event occurs.

RDS event categories include failure. Therefore, you can:

Configure an Amazon RDS event notification to react to the failure of the database in us-east-1 (the Aurora primary Region) by invoking an AWS Lambda function that promotes a read replica in another Region.

DataSync vs. File Gateway configuration of AWS Storage Gateway

- Use AWS DataSync to migrate existing data to Amazon S3

- Subsequently use the File Gateway configuration of AWS Storage Gateway to retain access to the migrated data and for ongoing updates from your on-premises file-based applications.

Receiving CloudTrail logs from Multiple Accounts into a single S3 bucket

- Turn on CloudTrail in account A, where the destination bucket will belong. Do not turn on CloudTrail in any other accounts yet.

- Update the bucket policy on your destination bucket to grant cross-account permissions to CloudTrail.

- Turn on CloudTrail in the other accounts whose logs you want delivered. Configure CloudTrail in these accounts to use the same bucket, belonging to account A, that you specified in step 1.
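The cross-account bucket policy has two statements. The first lets CloudTrail read the bucket ACL. The second lets CloudTrail write objects under `AWSLogs/<account-id>/` for each listed account, on condition that the bucket owner gets full control. The following Python sketch generates that policy. The bucket name and account IDs are placeholders, and newer versions of the AWS example also add `aws:SourceArn` conditions, which are omitted here. The result could be applied with `put_bucket_policy(Bucket=..., Policy=...)`.

```python
from __future__ import annotations

import json


def cloudtrail_bucket_policy(bucket: str, account_ids: list[str], prefix: str = "") -> str:
    """Bucket policy letting CloudTrail in several accounts write to one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    log_root = f"{bucket_arn}/{prefix}/AWSLogs" if prefix else f"{bucket_arn}/AWSLogs"
    cloudtrail = {"Service": "cloudtrail.amazonaws.com"}
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # CloudTrail checks the bucket ACL before delivering logs.
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": cloudtrail,
                "Action": "s3:GetBucketAcl",
                "Resource": bucket_arn,
            },
            {
                # One AWSLogs/<account-id>/ path per account allowed to deliver logs.
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": cloudtrail,
                "Action": "s3:PutObject",
                "Resource": [f"{log_root}/{acct}/*" for acct in account_ids],
                "Condition": {"StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}},
            },
        ],
    }
    return json.dumps(policy, indent=2)
```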

AWS Systems Manager

Session Manager

- Supports Port Forwarding

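- Browser-based access from the AWS Management Console

- Can log session activity to Amazon S3 or Amazon CloudWatch Logs; API calls such as StartSession are recorded by CloudTrail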

Run Command

- No need to log in to servers

- Replaces SSH: no SSH access required

- Simply send commands

- All actions recorded by CloudTrail

AWS Certificate Manager - Regions

- To use a certificate with Elastic Load Balancing for the same fully qualified domain name (FQDN) or set of FQDNs in more than one AWS region, you must request or import a certificate for each region.

- To use an ACM certificate with Amazon CloudFront, you must request or import the certificate in the US East (N. Virginia) region.

Routing traffic to CloudFront

Use a Route 53 alias record that points to the CloudFront distribution.

Aurora cross-region replication: Logical vs Physical replication

You can set up cross-region Aurora replicas using either physical or logical replication.

Physical Replication = Aurora Global Database

- Dedicated infrastructure

- Low-latency global reads

- Disaster recovery

- Replicate to up to five secondary regions with typical latency of under a second.

Logical Replication

- Replicate to Aurora and non-Aurora databases, even across regions

- Based on single-threaded MySQL binlog replication

- The replication lag will be influenced by the change/apply rate and delays in network communication between the specific regions selected.

ECS Service Load Balancing with ALB

Application Load Balancers offer several features that make them attractive for use with Amazon ECS services:

- Each service can serve traffic from multiple load balancers and expose multiple load balanced ports by specifying multiple target groups.

- They are supported by tasks using both the Fargate and EC2 launch types.

- Application Load Balancers allow containers to use dynamic host port mapping (so that multiple tasks from the same service are allowed per container instance).

- Application Load Balancers support path-based routing and priority rules (so that multiple services can use the same listener port on a single Application Load Balancer).

Fargate Task Storage

For Fargate tasks, the following storage types are supported:

- Amazon EFS volumes for persistent storage.

- Ephemeral storage for nonpersistent storage.

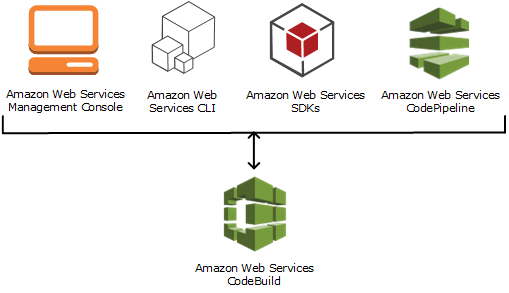

CodeBuild, CodePipeline, CodeDeploy

How CodeDeploy works

AWS CodeDeploy is a fully managed deployment service that automates software deployments to a variety of compute services such as:

- Amazon EC2,

- AWS Fargate,

- AWS Lambda, and

- your on-premises servers.

- Deploys to any instance, including Amazon EC2 instances and on-premises servers.

- For EC2/on-premises deployments, the CodeDeploy agent must be installed and running on each instance.

- How it works:

- First, you create deployable content on your local development machine or similar environment

- You add an application specification file (AppSpec file). The AppSpec file is unique to CodeDeploy. It defines the deployment actions you want CodeDeploy to execute.

- You bundle your deployable content and the AppSpec file into an archive file, and then upload it to an Amazon S3 bucket or a GitHub repository. This archive file is called an application revision (or simply a revision).

- You provide CodeDeploy with information about your deployment, such as which Amazon S3 bucket or GitHub repository to pull the revision from and to which set of Amazon EC2 instances to deploy its contents.

- CodeDeploy calls a set of Amazon EC2 instances a deployment group. A deployment group contains individually tagged Amazon EC2 instances, Amazon EC2 instances in Amazon EC2 Auto Scaling groups, or both.

- Each time you successfully upload a new application revision that you want to deploy to the deployment group, that bundle is set as the target revision for the deployment group. In other words, the application revision that is currently targeted for deployment is the target revision. This is also the revision that is pulled for automatic deployments.

- Next, the CodeDeploy agent on each instance polls CodeDeploy to determine what and when to pull from the specified Amazon S3 bucket or GitHub repository.

- Finally, the CodeDeploy agent on each instance pulls the target revision from the Amazon S3 bucket or GitHub repository and, using the instructions in the AppSpec file, deploys the contents to the instance.

Real Example: Automated AMI Builder

CodeBuild

AWS CodeBuild is a fully managed continuous integration service that:

- compiles source code,

- runs tests, and

- produces software packages

Running CodeBuild

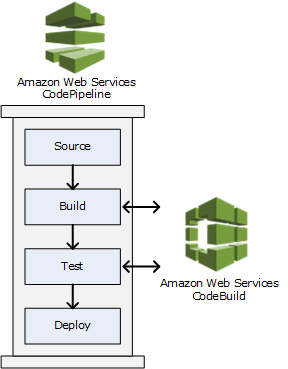

- CodeBuild Console

- CodePipeline Console

- CLI or SDK

CodeBuild as a build or test action of a CodePipeline pipeline

CodePipeline

AWS CodePipeline is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates.

AWS CodePipeline integrates with AWS services such as:

- AWS CodeCommit,

- Amazon S3,

- AWS CodeBuild,

- AWS CodeDeploy,

- AWS Elastic Beanstalk,

- AWS CloudFormation,

- AWS OpsWorks,

- Amazon ECS,

- AWS Lambda

AWS IoT Core

AWS IoT Core provides secure, bi-directional communication for Internet-connected devices (such as sensors, actuators, embedded devices, wireless devices, and smart appliances) to connect to the AWS Cloud over MQTT, HTTPS, and LoRaWAN.

ELB Routing algorithm

ALB

The load balancer node that receives the request uses the following process:

- Evaluates the listener rules in priority order to determine which rule to apply.

- Selects a target from the target group for the rule action, using the routing algorithm configured for the target group.

- The default routing algorithm is round robin.

- Routing is performed independently for each target group, even when a target is registered with multiple target groups.

Routing Algorithms supported by ALB

Round Robin

Round robin load balancing is a simple way to distribute client requests across a group of servers. Each client request is forwarded to the next server in turn; after the last server, the load balancer goes back to the top of the list and repeats.

Least Outstanding Requests

A “least outstanding requests” routing algorithm chooses which instance receives the next request by selecting the instance that, at that moment, has the lowest number of outstanding (pending, unfinished) requests.
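The two ALB algorithms can be contrasted in a toy, single-node Python model. ALB's real implementation is not described here, and the tie-breaking rule (first target in list order) is an assumption of the sketch.

```python
from itertools import cycle


class RoundRobin:
    """Hand requests to targets in turn, wrapping back to the first target."""

    def __init__(self, targets):
        self._targets = cycle(targets)

    def pick(self):
        return next(self._targets)


class LeastOutstandingRequests:
    """Pick the target with the fewest in-flight requests (ties: list order)."""

    def __init__(self, targets):
        self.outstanding = {t: 0 for t in targets}

    def pick(self):
        target = min(self.outstanding, key=self.outstanding.get)
        self.outstanding[target] += 1  # request is now in flight
        return target

    def finish(self, target):
        self.outstanding[target] -= 1  # request completed
```

Round robin ignores how busy each target is. Least outstanding requests adapts when some requests are slow, because a target stuck on long requests keeps a high count and is skipped.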

NLB

- Selects a target from the target group for the default rule using a flow hash algorithm. It bases the algorithm on:

- The protocol

- The source IP address and source port

- The destination IP address and destination port

- The TCP sequence number

- Routes each individual TCP connection to a single target for the life of the connection. The TCP connections from a client have different source ports and sequence numbers, and can be routed to different targets.
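The following Python sketch illustrates flow hashing. It hashes the fields listed above and maps the result onto a target, so every packet of one connection lands on the same target, while a new connection (new source port and sequence number) may land elsewhere. SHA-256 and modulo mapping are stand-ins, because the NLB's actual hash function is not documented here. A real load balancer also keeps existing connections pinned when targets are added or removed, which modulo hashing alone would not do.

```python
from __future__ import annotations

import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    tcp_seq: int  # initial sequence number, fixed for the life of the connection


def pick_target(flow: Flow, targets: list[str]) -> str:
    """Hash the flow's fields and map the hash onto one of the targets."""
    key = "|".join(str(field) for field in flow).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return targets[bucket % len(targets)]
```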

Classic Load Balancer

- Uses the round robin routing algorithm for TCP listeners

- Uses the least outstanding requests routing algorithm for HTTP and HTTPS listeners

AWS Batch Scheduling

The AWS Batch scheduler evaluates when, where, and how to run jobs that have been submitted to a job queue. Jobs run in approximately the order in which they are submitted as long as all dependencies on other jobs have been met.

DynamoDB Continuous Backup

- You can enable continuous backups with a single click in the AWS Management Console, a simple API call, or with the AWS Command Line Interface (CLI).

- DynamoDB can back up your data with per-second granularity and restore to any single second from the time PITR was enabled up to the prior 35 days.
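The restore window is simple date arithmetic. The following Python sketch assumes the fixed 35-day retention stated above. It ignores the few minutes by which the latest restorable time lags behind the present; `describe_continuous_backups` reports the actual `EarliestRestorableDateTime` and `LatestRestorableDateTime`. The function names are hypothetical.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=35)


def earliest_restorable(enabled_at: datetime, now: datetime) -> datetime:
    """The later of the time PITR was enabled and 35 days before now."""
    return max(enabled_at, now - RETENTION)


def can_restore_to(target: datetime, enabled_at: datetime, now: datetime) -> bool:
    return earliest_restorable(enabled_at, now) <= target <= now
```

For example, with PITR enabled on 2024-01-01 and "now" at 2024-03-31 (UTC), the earliest restorable point is 2024-02-25, 35 days earlier. If PITR had been enabled on 2024-03-20, the window would instead start on 2024-03-20.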

Secrets Manager - rotating RDS password

- Because each service or database can have a unique way of configuring secrets, Secrets Manager uses a Lambda function you can customize to work with a selected database or service. You customize the Lambda function to implement the service-specific details of rotating a secret.

- The AWS::SecretsManager::RotationSchedule resource configures rotation for a secret.
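Secrets Manager invokes the rotation function once for each of four steps: `createSecret`, `setSecret`, `testSecret`, `finishSecret`. Each invocation passes `SecretId`, `ClientRequestToken`, and `Step` in the event. The following Python skeleton shows only that dispatch structure. The step bodies are hypothetical stubs whose comments describe what each step is meant to do for an RDS password. AWS also provides ready-made rotation function templates for RDS, which are usually the better starting point.

```python
from __future__ import annotations

from typing import Callable, Dict

StepFn = Callable[[str, str], None]
STEP_NAMES = ("createSecret", "setSecret", "testSecret", "finishSecret")


def create_pending_secret(secret_id: str, token: str) -> None:
    # Generate a new password and store it as version `token`, labeled AWSPENDING.
    raise NotImplementedError


def set_pending_secret(secret_id: str, token: str) -> None:
    # Change the database user's password to the AWSPENDING value.
    raise NotImplementedError


def verify_pending_secret(secret_id: str, token: str) -> None:
    # Log in to the database with the AWSPENDING credentials to prove they work.
    raise NotImplementedError


def finish_rotation(secret_id: str, token: str) -> None:
    # Move the AWSCURRENT staging label to version `token`.
    raise NotImplementedError


DEFAULT_STEPS: Dict[str, StepFn] = dict(zip(STEP_NAMES, (
    create_pending_secret, set_pending_secret, verify_pending_secret, finish_rotation)))


def rotation_handler(event: dict, context, steps: Dict[str, StepFn] | None = None) -> None:
    """Dispatch one rotation invocation to the function for its Step."""
    steps = DEFAULT_STEPS if steps is None else steps
    step = event["Step"]
    if step not in steps:
        raise ValueError(f"unknown rotation step: {step!r}")
    steps[step](event["SecretId"], event["ClientRequestToken"])
```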